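Large files are usually split into chunks before being uploaded. Today I'll implement the front-end and back-end logic for handling large file uploads.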

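Approach

The front end splits the file with File.slice() and sends each chunk to the back end via AJAX.

The back end creates a folder for the file and saves each chunk (called a Thunk in the code) into it.

Once the front end has sent all the chunks, it sends the back end a merge request.

The back end merges all chunks based on the file info and saves the result in the final folder, upload.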
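The whole flow can be simulated in memory to see why it works: chunks may arrive in any order, and the merge step restores the original bytes by sorting on the chunk index. This is a hypothetical sketch, not the article's code. InMemoryChunkStore stands in for the back end's chunk folder, and Uint8Array.slice() stands in for File.slice().

```typescript
// Hypothetical in-memory stand-in for the back end: chunks are stored per
// file hash and keyed by chunk index, then concatenated in index order.
class InMemoryChunkStore {
  private chunks = new Map<string, Map<number, Uint8Array>>();

  // "upload": save one chunk under its file hash
  save(hash: string, index: number, data: Uint8Array): void {
    let parts = this.chunks.get(hash);
    if (!parts) {
      parts = new Map<number, Uint8Array>();
      this.chunks.set(hash, parts);
    }
    parts.set(index, data);
  }

  // "merge": join all chunks of a file in index order
  merge(hash: string): Uint8Array {
    const parts = this.chunks.get(hash);
    if (!parts) throw new Error(`no chunks stored for ${hash}`);
    const ordered = Array.from(parts.entries())
      .sort((a, b) => a[0] - b[0])
      .map((entry) => entry[1]);
    const total = ordered.reduce((sum, d) => sum + d.length, 0);
    const out = new Uint8Array(total);
    let offset = 0;
    for (const d of ordered) {
      out.set(d, offset);
      offset += d.length;
    }
    this.chunks.delete(hash); // like deleting the temporary chunk folder
    return out;
  }
}

// "front end": split a 25-byte file into 10-byte chunks, upload out of order
const source = new Uint8Array(25).map((_, i) => i);
const chunkSize = 10;
const store = new InMemoryChunkStore();
for (const i of [2, 0, 1]) {
  store.save("demo-hash", i, source.slice(i * chunkSize, (i + 1) * chunkSize));
}
const merged = store.merge("demo-hash");
console.log(merged.length); // 25
```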

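Front-end upload

The front end is written in plain JavaScript/TypeScript without a framework, on top of my own webpack project template, which supports the usual modern bundling features.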

sunzehui/webpack-site-starter: An fontent project starter without a virtual DOM framework (github.com)

Page structure

The page is simple: a file input, an upload button and a progress bar.

```html
<div class="main">
  <span class="title">Please upload</span>
  <div class="upload-group">
    <input type="file" name="file" id="file" />
    <button type="button" class="upload-btn">
      <svg></svg>
      Upload
    </button>
  </div>
  <!-- <progress> has no min attribute; its range is always 0..max -->
  <progress id="progress" max="100" value="0"></progress>
</div>
```

Upload logic

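```ts
// uploadBtn, fileChoise (the <input type="file">), fileStatus, progress and
// uploadedSize are defined elsewhere in the module.
uploadBtn.addEventListener("click", async () => {
  const {
    files: [file],
  } = fileChoise;
  // Check the selection before reading file.name
  if (!file) {
    fileStatus.innerText = "No file selected";
    return;
  }
  fileStatus.innerText = "Selected: " + file.name;
  progress.max = file.size;
  uploadedSize = 0; // reset the progress counter for this upload

  const thunkList = createThunkList(file);
  // MD5 of the whole file, used as its unique ID
  const hash = await calcHash(thunkList);

  // The server already has this file: skip the upload ("instant upload")
  const isExist = await checkExist(hash);
  if (isExist) {
    progress.value = file.size;
    fileStatus.innerText = "Upload succeeded";
    return;
  }

  // Upload every chunk, then ask the server to merge them
  const uploadTask = createUploadTask(thunkList, hash);
  await Promise.all(uploadTask);
  await mergeFile({ hash, name: file.name, limitSize });
  fileStatus.innerText = "Upload succeeded";
});
```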

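Setting the current state

When the upload button is clicked, the handler first checks that a file was selected, shows its name and sets the progress bar's maximum to the file size. It then splits and hashes the file, skips the upload if the server already has it, and otherwise uploads all chunks and asks the server to merge them.

Creating the chunk list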

```ts
const limitSize = 10 * 1024 * 1024; // chunk size: 10 MB

function createThunkList(file: File) {
  const thunkList = [];
  // Number of chunks (despite its name, thunkSize is a count)
  const thunkSize = Math.ceil(file.size / limitSize);
  for (let i = 0; i < thunkSize; i++) {
    const fileChunk = file.slice(limitSize * i, limitSize * (i + 1));
    thunkList.push({
      file: fileChunk,
      // Real chunk size: the last chunk is usually smaller than limitSize
      size: fileChunk.size,
      name: file.name,
      type: file.type,
      index: i,
    });
  }
  return thunkList;
}
```

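This splits the file using the chunk size I set, limitSize = 10 MB: every chunk is 10 MB except the last, which may be smaller. Each entry records the chunk's index and the chunk itself, and the entries are kept in a list so they are easy to work with.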
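The chunk arithmetic can be checked without a real File. The hypothetical helper chunkRanges below reproduces the byte ranges that createThunkList passes to file.slice(), clamping the last end to the file size (slice() clamps an out-of-range end the same way). For the 133 MB file tested at the end of this post, that gives 14 chunks, the last one 3 MB.

```typescript
// Hypothetical helper: [start, end) byte ranges of each chunk, mirroring
// the arithmetic in createThunkList without needing a File object.
function chunkRanges(fileSize: number, chunkSize: number): Array<[number, number]> {
  const ranges: Array<[number, number]> = [];
  const count = Math.ceil(fileSize / chunkSize);
  for (let i = 0; i < count; i++) {
    const start = chunkSize * i;
    const end = Math.min(chunkSize * (i + 1), fileSize); // last chunk is shorter
    ranges.push([start, end]);
  }
  return ranges;
}

const MB = 1024 * 1024;
const ranges = chunkRanges(133 * MB, 10 * MB);
console.log(ranges.length); // 14
console.log((ranges[13][1] - ranges[13][0]) / MB); // 3
```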

Computing the file hash

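```ts
import SparkMD5 from "spark-md5";

// Read the chunks one after another and feed each into an incremental MD5,
// so the whole file never has to be held in memory at once.
// ThunkList is the type of the list returned by createThunkList.
const calcHash = (thunkList: ThunkList): Promise<string> => {
  const spark = new SparkMD5.ArrayBuffer();
  return new Promise((resolve, reject) => {
    // An empty file produces no chunks: return the hash of empty input
    if (thunkList.length === 0) {
      resolve(spark.end());
      return;
    }
    const calc = (index: number) => {
      const fr = new FileReader();
      // Attach the handlers before starting the read
      fr.onload = () => {
        spark.append(fr.result as ArrayBuffer);
        if (index === thunkList.length - 1) {
          resolve(spark.end()); // hex digest of the whole file
          return;
        }
        calc(index + 1);
      };
      fr.onerror = reject;
      fr.readAsArrayBuffer(thunkList[index].file);
    };
    calc(0);
  });
};
```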

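The hash is computed with SparkMD5.ArrayBuffer(), which hashes incrementally: every chunk has to be appended to it as an ArrayBuffer, in order, before spark.end() returns the hash of the whole file. That is why FileReader() is used to read the chunks one at a time.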
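Incremental hashing yields exactly the same digest as hashing the whole file in one go, which is what makes chunk-by-chunk reading safe. The sketch below demonstrates this with Node's built-in crypto module instead of SparkMD5 (a swapped-in library, chosen only so the example runs outside a browser); md5Incremental and md5OneShot are hypothetical helper names.

```typescript
import { createHash } from "node:crypto";

// Feed the data to MD5 in fixed-size pieces, as calcHash does chunk by chunk
function md5Incremental(data: Uint8Array, chunkSize: number): string {
  const hash = createHash("md5");
  for (let start = 0; start < data.length; start += chunkSize) {
    hash.update(data.subarray(start, start + chunkSize));
  }
  return hash.digest("hex");
}

// Hash everything at once, for comparison
function md5OneShot(data: Uint8Array): string {
  return createHash("md5").update(data).digest("hex");
}

const data = new Uint8Array(25).map((_, i) => i);
console.log(md5Incremental(data, 10) === md5OneShot(data)); // true
```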

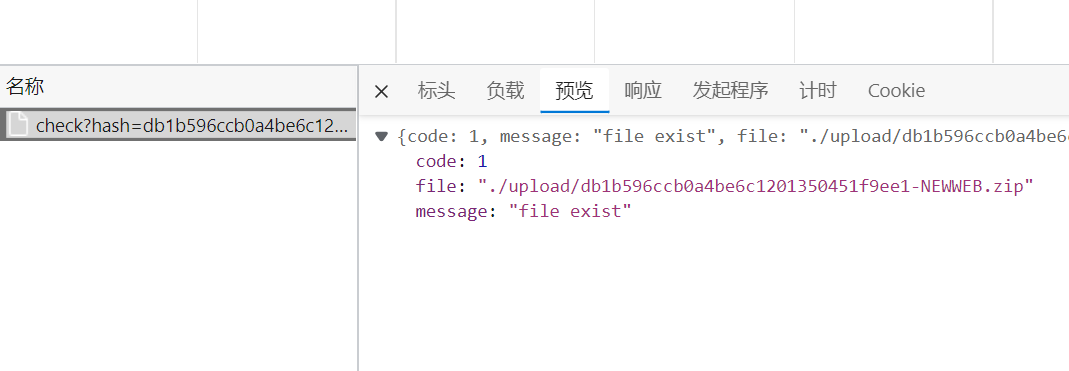

Skipping files that already exist

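```ts
import axios from "axios";

// Ask the server whether a file with this hash was already uploaded.
// The server answers { code: 1 } if it exists and { code: 0 } otherwise.
const checkExist = async (hash: string): Promise<boolean> => {
  const { data } = await axios.get("/api/check", {
    params: { hash },
  });
  return !!data.code;
};
```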

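The hash serves as the file's unique ID: if the server already has a file with the same hash, the upload is skipped entirely, which is what makes "instant upload" possible.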
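The service-side checkExist is not shown in this post. Since mergeSegment saves each merged file as <hash>-<name>, one plausible implementation is to look for a name with that prefix in the upload folder. findUploadedByHash below is a hypothetical sketch of that lookup, kept pure so it works on any list of names (for example the result of fsPromises.readdir on the upload folder). An MD5 hex digest is always 32 characters with no "-", so the prefix cannot match a different hash.

```typescript
// Hypothetical lookup: merged files are named `${hash}-${originalName}`,
// so a file exists for this hash if some name starts with `${hash}-`.
function findUploadedByHash(fileNames: string[], hash: string): string | undefined {
  return fileNames.find((fileName) => fileName.startsWith(`${hash}-`));
}

const uploaded = [
  "0cc175b9c0f1b6a831c399e269772661-a.zip",
  "92eb5ffee6ae2fec3ad71c777531578f-b.mp4",
];
console.log(findUploadedByHash(uploaded, "92eb5ffee6ae2fec3ad71c777531578f"));
// 92eb5ffee6ae2fec3ad71c777531578f-b.mp4
```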

Creating the upload tasks

```ts
// One upload promise per chunk; each advances the progress bar when it
// finishes. uploadFile and createFormData are helpers defined elsewhere.
function createUploadTask(thunkList: ThunkList, hash: string) {
  return thunkList.map((thunk) =>
    uploadFile(createFormData({ ...thunk, hash })).then(() => {
      uploadedSize += thunk.size;
      progress.value = uploadedSize;
    }),
  );
}
```
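Promise.all(uploadTask) starts every chunk upload at once, so a very large file means many simultaneous requests. A common alternative is to cap how many are in flight. runWithLimit below is a hypothetical helper, not part of the original code: it runs task factories through a small pool of workers and keeps the results in input order.

```typescript
// Run async task factories with at most `limit` of them in flight.
// Results are returned in the same order as the tasks.
async function runWithLimit<T>(
  tasks: Array<() => Promise<T>>,
  limit: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0; // index of the next task to start
  // Each worker keeps taking the next unstarted task until none are left
  const worker = async (): Promise<void> => {
    while (next < tasks.length) {
      const i = next++;
      results[i] = await tasks[i]();
    }
  };
  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, worker));
  return results;
}
```

With it, the upload step could become something like runWithLimit(thunkList.map((thunk) => () => uploadFile(createFormData({ ...thunk, hash }))), 4), with the progress update chained onto each upload as in createUploadTask. The helper takes factories rather than promises because a promise is already running by the time it exists.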

Merging the file

```ts
// Ask the server to merge the uploaded chunks of this file.
// IMergeFile is { hash, name, limitSize }.
const mergeFile = (fileInfo: IMergeFile) => {
  return axios.post("/api/merge", fileInfo);
};
```

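The file info (hash, name and limitSize) is sent to the server to request the merge. The hash and name identify the chunk folder, and limitSize tells the server at which byte offset each chunk starts in the final file.

Back-end handling

There are three endpoints: upload a chunk (upload), merge the chunks (merge), and check whether a file already exists on the server (check).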

```ts
import {
  Body,
  Controller,
  Get,
  Post,
  Query,
  UploadedFile,
  UseInterceptors,
} from '@nestjs/common';
import { FileInterceptor } from '@nestjs/platform-express';
// AppService and the DTO classes come from the project's own files.

@Controller()
export class AppController {
  constructor(private readonly appService: AppService) {}

  // Receives one chunk as the multipart field "file" plus its metadata
  @Post('upload')
  @UseInterceptors(FileInterceptor('file'))
  async uploadFile(
    @UploadedFile() file: Express.Multer.File,
    @Body() uploadFileDto: UploadFileDTO,
  ) {
    await this.appService.saveSegment(file, uploadFileDto);
    return { code: 0, message: 'success' };
  }

  // Combines all stored chunks of a file into the upload folder
  @Post('merge')
  async mergeFile(@Body() mergeFileDto: MergeFileDTO) {
    await this.appService.mergeSegment(mergeFileDto);
    return { code: 0, message: 'success' };
  }

  // code: 1 means a file with this hash already exists
  @Get('check')
  async checkExist(@Query() checkFileDto: CheckFileDTO) {
    const uploadedFile = await this.appService.checkExist(checkFileDto);
    if (uploadedFile) {
      return { code: 1, message: 'file exist', file: uploadedFile };
    }
    return { code: 0 };
  }
}
```

Uploading a chunk

```ts
// Service method. fsPromises is the promise-based fs API;
// HttpException comes from @nestjs/common.
async saveSegment(file: Express.Multer.File, fileInfo: UploadFileDTO) {
  if (!file) throw new HttpException('file not found', 400);
  // A local variable rather than this.segmentDirPath: Nest services are
  // singletons, so concurrent requests would overwrite a shared field.
  const segmentDirPath = this.generateUniqueSegmentDirName(
    fileInfo.name,
    fileInfo.hash,
  );
  // Make sure the per-file chunk folder exists
  await this.checkDir(pathContcat(segmentDirPath));
  const fileName = this.generateUniqueSegmentFileName(
    fileInfo.hash,
    fileInfo.index,
    fileInfo.name,
  );
  await fsPromises.writeFile(`${segmentDirPath}/${fileName}`, file.buffer);
}
```
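generateUniqueSegmentFileName is not shown, but the sort in mergeSegment reads the chunk index from the part of the file name before the first "-", so the index appears to be the name's prefix. Assuming that naming scheme (names like 3-<hash>-<name> below are hypothetical examples), this sketch shows why the merge has to sort numerically: plain string order puts chunk 10 before chunk 2.

```typescript
// Assumed naming scheme: "<index>-<hash>-<name>". Parse the index prefix.
function segmentIndex(fileName: string): number {
  return Number.parseInt(fileName.split("-")[0], 10);
}

// Sort chunk file names by their numeric index (returns a new array)
function sortSegments(fileNames: string[]): string[] {
  return [...fileNames].sort((a, b) => segmentIndex(a) - segmentIndex(b));
}

const names = ["10-h-a.bin", "2-h-a.bin", "0-h-a.bin", "1-h-a.bin"];
console.log([...names].sort()); // [ '0-h-a.bin', '1-h-a.bin', '10-h-a.bin', '2-h-a.bin' ]
console.log(sortSegments(names)); // [ '0-h-a.bin', '1-h-a.bin', '2-h-a.bin', '10-h-a.bin' ]
```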

Merging the chunks

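```ts
// Service methods. createReadStream, createWriteStream, WriteStream and
// unlinkSync come from 'fs'; fsPromises is the promise-based fs API.
async mergeSegment({ hash, name, limitSize }: MergeFileDTO) {
  // A local variable rather than this.segmentDirPath, since the singleton
  // service is shared by concurrent requests
  const segmentDirPath = this.generateUniqueSegmentDirName(name, hash);
  const segmentPath = await fsPromises.readdir(segmentDirPath);
  await this.checkDir(pathContcat(this.uploadDir));
  const filePath = pathContcat(this.uploadDir, `${hash}-${name}`);
  // readdir order is not guaranteed to be numeric, so sort by the chunk
  // index before the first '-'
  segmentPath.sort((a, b) => ~~a.split('-')[0] - ~~b.split('-')[0]);
  await this.mergeAllSegment(segmentDirPath, filePath, segmentPath, limitSize);
  await fsPromises.rmdir(segmentDirPath);
}

async mergeAllSegment(
  segmentDirPath: string,
  filePath: string,
  segmentPath: string[],
  limitSize: number,
) {
  // Create (or truncate) the target once. Each stream then opens it with
  // 'r+', so it writes at its own offset without truncating the others.
  await fsPromises.writeFile(filePath, '');
  const task = segmentPath.map((chunkPath, index) =>
    this.pipeStream(
      pathContcat(segmentDirPath, chunkPath),
      // Chunk i starts at byte i * limitSize
      createWriteStream(filePath, { flags: 'r+', start: index * limitSize }),
    ),
  );
  return Promise.all(task);
}

pipeStream(path: string, writeStream: WriteStream): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    const readStream = createReadStream(path);
    readStream.on('error', reject);
    writeStream.on('error', reject);
    // 'finish' fires once all data has been flushed to the target file
    writeStream.on('finish', () => {
      unlinkSync(path); // delete the chunk once it has been written
      resolve();
    });
    readStream.pipe(writeStream);
  });
}
```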
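Writing chunk i at i * limitSize only works because every chunk except the last is exactly limitSize bytes. A simpler alternative that needs no fixed chunk size is to append the chunks one by one in index order. mergeSequentially below is a hypothetical sketch using synchronous node:fs calls for brevity; inside a NestJS service the async or stream-based equivalents would avoid blocking the event loop.

```typescript
import { appendFileSync, mkdtempSync, readFileSync, writeFileSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Append each chunk to the target in the given (already sorted) order
function mergeSequentially(target: string, chunkPaths: string[]): void {
  writeFileSync(target, ""); // create or truncate the target
  for (const p of chunkPaths) {
    appendFileSync(target, readFileSync(p));
  }
}

// Demo in a temporary directory with three chunks of different sizes
const dir = mkdtempSync(join(tmpdir(), "merge-demo-"));
const parts = ["hello ", "chunked ", "world"].map((text, i) => {
  const p = join(dir, `${i}-part`);
  writeFileSync(p, text);
  return p;
});
const target = join(dir, "merged.txt");
mergeSequentially(target, parts);
console.log(readFileSync(target, "utf8")); // hello chunked world
```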

Summary

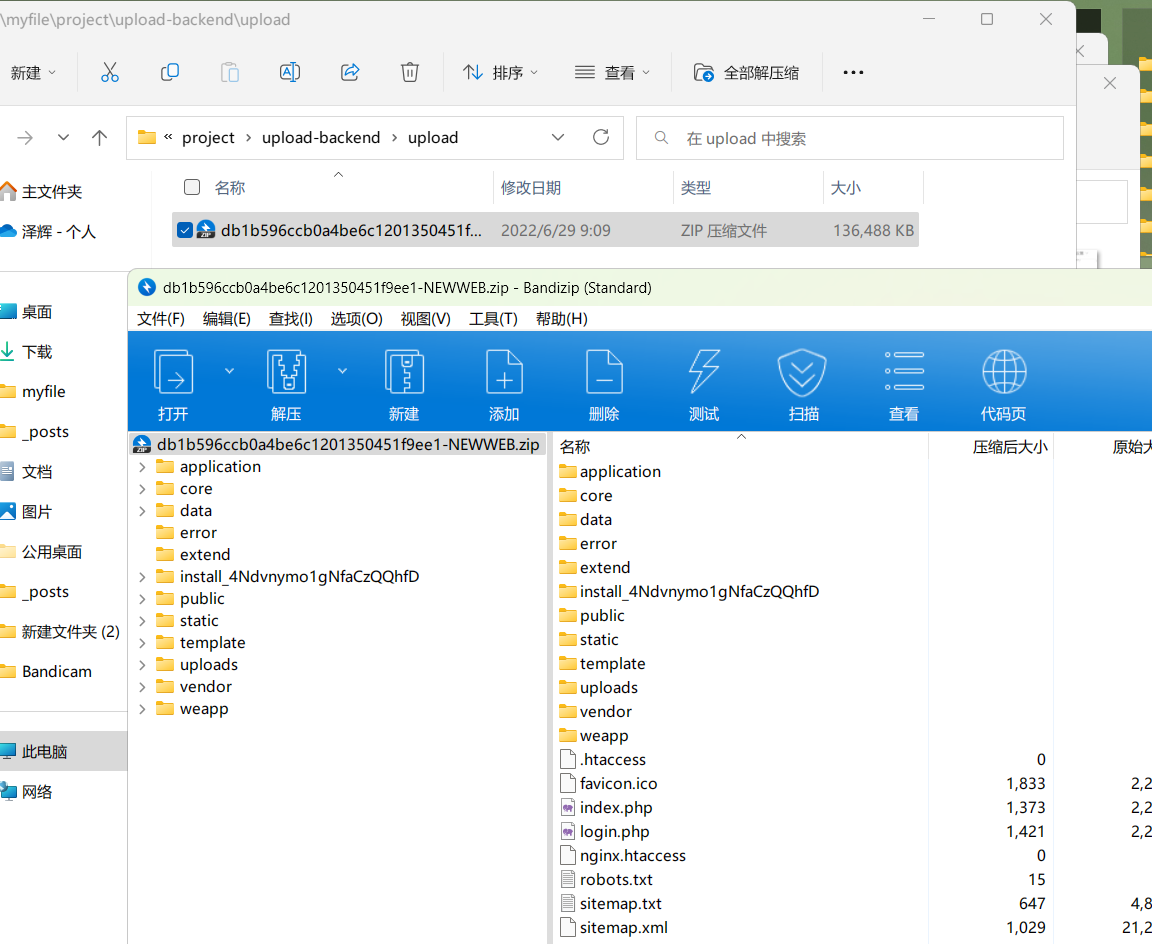

I tested uploading a 133 MB file.

Chunked upload

Instant upload

![image-20220629152641907 image]()

The merged file opens fine.

![image-20220629153333745 image]()

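Plenty is still unfinished. For example, returning a direct link to the uploaded file, which would probably need a static file route serving the upload folder.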

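Resumable upload is also missing: the back end would need to look up which chunks have already been uploaded and return them to the front end, which would then upload only the missing ones.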
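The resume logic described above comes down to a set difference on chunk indices. missingChunks is a hypothetical helper, assuming the back end can report the indices it already holds (for example by listing the chunk folder); the front end would then upload only the chunks it returns.

```typescript
// Hypothetical resume helper: given the total chunk count and the indices
// the server already has, return the indices that still need uploading.
function missingChunks(total: number, uploaded: number[]): number[] {
  const done = new Set(uploaded);
  const missing: number[] = [];
  for (let i = 0; i < total; i++) {
    if (!done.has(i)) missing.push(i);
  }
  return missing;
}

console.log(missingChunks(14, [0, 1, 2, 5])); // [3, 4, 6, 7, 8, 9, 10, 11, 12, 13]
```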

I was too lazy to write those for now...

No online demo this time; here's the source code:

sunzehui/big-file-upload: Large file upload with NestJS + vanilla JS (github.com)